Using ‘AlexNet’, 64 GPUs in parallel achieve 27x the speed of a single GPU for, as far as Fujitsu can determine, the world’s fastest processing.

A conventional method to accelerate deep learning is to use multiple computers equipped with GPUs, networked and arranged in parallel.

However, performance gains tail off as processors are added, because the time required to share data between computers increases. According to Fujitsu Labs, this happens when more than 10 computers are used at the same time.

The sharing technology was applied to the Caffe open-source deep learning framework.

To confirm effectiveness, Fujitsu Labs evaluated the technology on an AlexNet multi-layered neural network where, compared with one GPU, it achieved speed-ups of 14.7x with 16 GPUs and 27x with 64 GPUs.

“These are the world’s fastest processing speeds, representing an improvement in learning speeds of 46% for 16 GPUs and 71% for 64 GPUs,” said Fujitsu. “With this technology, machine learning that would have taken about a month on one computer can now be processed in about a day by running it on 64 GPUs in parallel.”
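The reported figures can be checked with some simple arithmetic. The sketch below computes parallel efficiency (speed-up divided by GPU count) and the run time of a job that takes a month on one GPU. The function names and the 30-day month are illustrative assumptions, not part of Fujitsu's announcement.

```python
# Speed-ups reported in the article, keyed by number of GPUs.
REPORTED_SPEEDUP = {16: 14.7, 64: 27.0}

# Assumption for illustration: "about a month" is taken as 30 days.
DAYS_ON_ONE_GPU = 30.0


def parallel_efficiency(speedup: float, gpus: int) -> float:
    """Fraction of ideal linear scaling achieved (1.0 would be perfect)."""
    return speedup / gpus


def days_needed(speedup: float, single_gpu_days: float = DAYS_ON_ONE_GPU) -> float:
    """Run time in days once the job is accelerated by `speedup`."""
    return single_gpu_days / speedup


for gpus, speedup in REPORTED_SPEEDUP.items():
    print(
        f"{gpus} GPUs: efficiency {parallel_efficiency(speedup, gpus):.1%}, "
        f"30-day job takes {days_needed(speedup):.1f} days"
    )
```

On these numbers, 16 GPUs run at roughly 92% of ideal scaling and 64 GPUs at roughly 42%, and a 30-day job on 64 GPUs comes out at a little over one day, consistent with the "about a day" in the quote.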

Deep learning is a technology that has greatly improved the accuracy of image, character and sound recognition compared to previous technologies, but in order to achieve this it must repeatedly learn from huge volumes of data, said Fujitsu, so algorithms that work across multiple GPUs have been developed.

Because there is an upper limit to the number of GPUs that can be installed in one computer, multiple computers have to be interconnected through a high-speed network.

“However, shared data sizes and computation times vary, and operations are performed in order, simultaneously using the previous operating results. As a result, additional [wasted] waiting time is required in communication between computers.”

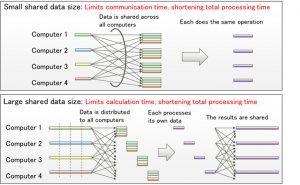

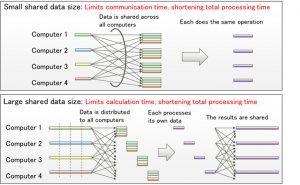

Fujitsu has done two things to limit the increase in waiting time between processing batches even with shared data of a variety of sizes:

Create supercomputer software that executes communications and operations simultaneously and in parallel.

Change processing methods according to the characteristics of the size of shared data and the sequence of deep learning processing.

The first technique automatically controls the priority order for data transmission so that data needed at the start of the next learning process is shared among the computers in advance for multiple continuous operations (see diagram).

With existing technology (left), because the data sharing processing of the first layer, which is necessary to begin the next learning process, is carried out last, the data sharing processing delay is even longer.

With the new method (right), by carrying out the data sharing processing for the first layer during the data sharing processing for the second layer, the wait time until the start of the next learning process can be shortened.
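The effect of the two orderings can be illustrated with a toy timeline. This is a minimal sketch, not Fujitsu's implementation: it assumes a single communication channel, integer time steps, and made-up ready times and transfer costs, and the function name is hypothetical. Layer 0 stands for the first layer, whose data the next learning process needs first.

```python
def first_layer_shared_at(ready_at, comm_cost, prioritise_early_layers):
    """Return the time step at which layer 0's data has been fully shared.

    ready_at[i]  -- time step at which layer i's data is available to send
    comm_cost[i] -- number of time steps needed to share layer i's data
    """
    remaining = list(comm_cost)
    t = 0
    while remaining[0] > 0:
        # Layers whose data is ready and not yet fully transmitted.
        pending = [
            i for i in range(len(remaining))
            if ready_at[i] <= t and remaining[i] > 0
        ]
        if pending:
            if prioritise_early_layers:
                # New ordering: the earliest layer goes first, interrupting
                # a transfer for a later layer if necessary.
                layer = min(pending)
            else:
                # Existing ordering: send in the order the data became ready.
                layer = min(pending, key=lambda i: ready_at[i])
            remaining[layer] -= 1  # the channel sends one step's worth
        t += 1
    return t


# Illustrative numbers only: the second layer (index 1) is ready first and
# is large; the first layer (index 0) is ready later and is small.
ready_at = [2, 0]
comm_cost = [1, 4]

print(first_layer_shared_at(ready_at, comm_cost, prioritise_early_layers=False))
print(first_layer_shared_at(ready_at, comm_cost, prioritise_early_layers=True))
```

In this toy model the total amount of data sent is the same in both cases. Only the moment at which the first layer's data becomes available changes, and that is what determines when the next learning process can begin.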

Optimising operations for data size

For processing in which operation results are shared with all computers (top of diagram), when the original data volume is small, each computer shares data and then carries out the same operation, eliminating transmission time for the results.

When the data volume is large, processing is distributed and processing results are shared with the other computers for use in the following operations. By automatically assigning the optimal operational method based on the amount of data, this technology minimises the total operation time (bottom).
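The trade-off between the two methods can be sketched with a simple cost model. Everything in the block below is an assumption made for illustration: the formulas, the parameter names and the default values are hypothetical and do not come from Fujitsu. The model charges a fixed latency per communication step plus a per-unit cost for transfer and for computation.

```python
def replicated_time(size, n, latency, transfer, compute):
    """Every computer receives all the data, then repeats the same operation.

    One communication step; no results need to be sent afterwards.
    """
    return latency + (n - 1) * size * (transfer + compute)


def distributed_time(size, n, latency, transfer, compute):
    """Each computer processes a 1/n slice, then shares its result.

    Two communication steps, but each moves and processes less data.
    """
    per_computer = (n - 1) * size / n
    return 2 * latency + per_computer * (2 * transfer + compute)


def choose_method(size, n, latency=10.0, transfer=1.0, compute=0.5):
    """Pick whichever method the toy cost model predicts to be faster."""
    r = replicated_time(size, n, latency, transfer, compute)
    d = distributed_time(size, n, latency, transfer, compute)
    return "replicated" if r <= d else "distributed"


for size in (1, 10, 100):
    print(size, choose_method(size, n=4))
```

Under these assumed costs, small data favours the replicated method because it avoids a second communication step, while large data favours the distributed method because each computer handles only a fraction of the work.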

The technology will be commercialised this year as part of Fujitsu’s Zinrai artificial intelligence tool set.

Applications are expected in developing unique neural network models for the autonomous control of robots and cars, for healthcare such as pathology classification, and for stock price forecasting in finance.

Electronics Weekly