The modern world relies on digital technology to operate. That technology in turn depends on timing, specifically accurate time synchronisation. Power grids, defence systems, trading platforms, aircraft, autonomous vehicles – none of these can function safely and effectively without pinpoint timing.

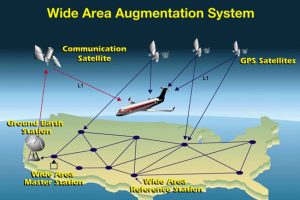

The science of timing took an evolutionary leap forwards in the early part of this century with the refinement of numerous positioning, navigation and timing technologies. In particular, improved global navigation satellite system (GNSS) constellations emerged to provide the basis of global positioning system (GPS) services. GPS is more than just a car’s satnav system: it is the cornerstone of numerous governmental and commercial use cases where maximum precision in measuring time and location is mission-critical.

GPS effectively lets us transport time globally to an accuracy of within 15 billionths of a second. By deploying it, organisations across multiple sectors can synchronise with anywhere and everywhere on the planet. Before GPS, the achievable tolerance was a little under one second; it is now down to nanoseconds. In the IT sector, for example, better timing and positioning have led to huge efficiencies in the creation of databases and in internet-based communications through better compression of data. In financial services, meanwhile, bankers can trade at a far higher level of accuracy.
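To put a figure such as 15 billionths of a second into spatial terms, the short Python sketch below converts a timing error into the distance a radio signal travels in that time. It is an illustration only: the function name is hypothetical, and it assumes the signal travels at the vacuum speed of light, ignoring atmospheric and receiver effects.

```python
# Illustrative sketch: how far a radio signal travels during a given timing error.
# Assumption: propagation at the vacuum speed of light, with no atmospheric delay.

SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by the SI definition of the metre


def light_travel_distance_m(timing_error_s: float) -> float:
    """Return the distance in metres that light covers in timing_error_s seconds."""
    return SPEED_OF_LIGHT_M_PER_S * timing_error_s


# 15 nanoseconds, the accuracy figure quoted in the text
print(f"{light_travel_distance_m(15e-9):.2f} m")  # prints "4.50 m"
```

On these assumptions, a 15-nanosecond timing error corresponds to roughly four and a half metres of signal path, which is why timing and positioning accuracy are so closely linked.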

A fly in the GPS ointment

There is, however, a problem with GPS. Or perhaps that would better be described as a series of problems, some due to malicious interference and some down to unintended conflict with other technologies. The rising number of connected devices operating across an increasingly crowded RF spectrum has been bad news for all types of GNSS connectivity, all of which use satellite navigation signals that are vulnerable to distortion when set against other types of radio signal. Quality and accuracy of the positioning and timing of GNSS signals can be degraded or wiped out completely.

There are also many state-sponsored interests seeking to exploit and manipulate the frailty of GNSS and GPS to further military and political goals. The past few years have seen, for example, continuous interference with GPS signals in the Baltic region, along Nato’s border with Russia. Similar activity is taking place daily in areas of conflict, from Ukraine to Lebanon, causing alarm for governments but also for airlines, shipping lines and telecom providers.

Jamming and blocking of GPS signals requires little in the way of equipment or expertise and can have consequences for the billions of people who unthinkingly rely on GPS every day. The so-called ‘invisible utility’ is something people are scarcely aware of, but when it goes wrong, it is quickly apparent. While much of this interference is more a nuisance than a serious threat, it is taking a toll on people’s faith in GPS.

Effects of degradation

The consequences of degraded positioning and timing vary from one vertical sector to another.

For example, data centres need to communicate information across long distances millions of times per second and are responsible for processing critical transactions with exact timing. While timing has always been important to data centres and those who rely on them, today’s data facilities are more dispersed, interconnected and complex than ever. The world of commerce depends more and more on workloads stored and managed on public cloud platforms, driving the criticality of data centres up still further. Connectivity across this complex mesh of endpoints is a perpetual challenge, as is the drive to improve timing, synchronisation and resiliency, thereby ensuring data integrity. Without a failsafe way to handle timing, there is a high risk of eventual data corruption or loss on a disastrous scale.

Another sector is finance. Financial services institutions rely on tightly synchronised timing services in many ways, from fulfilling compliance requirements to operational analytics, market transparency and the automation of trades using algorithms.

Such is the nature of these high-frequency trading algorithms that just a few nanoseconds of inaccuracy can make or break the profitability of a transaction. Banks often deploy their own on-premise satellite receivers to gain advantage measured in infinitesimal fractions of a second. The vulnerability of GNSS and GPS has not gone unnoticed in these circles and the issue has been rapidly moving up the ranks of every bank’s risk assessment agenda. Governments and regulators are demanding that banks find reliable ways to ensure timing resilience and that they apply the same level of standards that would underpin critical national infrastructure.

The EU’s Markets in Financial Instruments Directive, which standardises the regulatory disclosures required for operating within the EU, makes precise timing a regulatory requirement. It mandates that all transactions must be recorded in co-ordinated universal time (UTC).
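As a rough illustration of what recording a transaction in UTC can look like in software, the Python sketch below normalises a timestamp to UTC before storing it. The function name `record_trade` and the record fields are hypothetical, and the microsecond granularity is an assumption made for the example rather than a figure taken from the directive.

```python
from datetime import datetime, timezone
from typing import Optional


def record_trade(trade_id: str, price: float, now: Optional[datetime] = None) -> dict:
    """Build a trade record whose timestamp is always expressed in UTC.

    `now` may be supplied for testing; otherwise the system clock is read.
    All names here are hypothetical and for illustration only.
    """
    ts = now if now is not None else datetime.now(timezone.utc)
    if ts.tzinfo is None:
        # A naive timestamp has no defined relationship to UTC, so reject it.
        raise ValueError("timestamp must be timezone-aware")
    ts_utc = ts.astimezone(timezone.utc)
    return {
        "trade_id": trade_id,
        "price": price,
        # Assumed granularity: microseconds (the finest Python's datetime offers).
        "timestamp_utc": ts_utc.isoformat(timespec="microseconds"),
    }


print(record_trade("T-0001", 101.25)["timestamp_utc"])
```

A record like this is only as trustworthy as the clock behind it: the conversion guarantees a consistent time scale, not that the host clock is itself synchronised to a reliable UTC source.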

New time infrastructures

UTC is the source of time not just in the world of banks and forex traders, but in numerous commercial and non-commercial spheres where reliability of timing matters. An appetite is emerging for UTC-compatible timing systems that are inherently less vulnerable than GNSS-based ones, replacing or running alongside them, augmenting performance and accuracy.

An earthbound system of timing, rather than one founded on an unreliable satellite connection, seems to be the way forward.

A number of bodies around the world are at work on new time infrastructures. The National Institute of Standards and Technology in the US is one example. There is also the UK-based National Timing Centre (NTC) programme, led by the National Physical Laboratory (NPL). It is developing a terrestrial time technology infrastructure designed to provide more resilient and accurate timestamping and frequency signals.

When complete, the NTC programme will enable the UK to move away from reliance on GNSS and deliver resilient time and frequency across the country, providing a new level of confidence in critical national infrastructure. The resilient and reliable time and frequency signals currently under development will accelerate innovation in new technologies such as smart grids, time-critical 5G and 6G applications, factories of the future, smart cities and connected autonomous vehicles.

It is in many ways noteworthy that the atomic clock should be at the centre of the contemporary drive for timing accuracy. This type of clock uses the resonance frequencies of certain atoms, usually caesium or rubidium, to keep time with extreme precision. Now being applied to the most modern of needs, the atomic clock was conceived in 1945, with the first ones put to use in the mid-1950s.
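The principle of keeping time by counting atomic resonance cycles can be sketched in a few lines of Python. The caesium frequency used below is the value that defines the SI second; the function names and the example fractional frequency offset of 1e-13 are assumptions chosen purely for illustration and do not describe any particular clock.

```python
# Illustrative sketch of timekeeping by counting resonance cycles.

# Caesium-133 hyperfine transition frequency, which defines the SI second.
CAESIUM_HZ = 9_192_631_770


def elapsed_seconds(cycles_counted: int) -> float:
    """Convert a count of caesium resonance cycles into elapsed seconds."""
    return cycles_counted / CAESIUM_HZ


def accumulated_error_s(fractional_offset: float, interval_s: float) -> float:
    """Time error built up over interval_s by a clock running fast or slow
    by a constant fractional frequency offset."""
    return fractional_offset * interval_s


print(elapsed_seconds(9_192_631_770))  # one second's worth of cycles: 1.0

# Assumed example: an offset of 1 part in 10^13, held for one day (86,400 s)
error_ns = accumulated_error_s(1e-13, 86_400) * 1e9
print(f"{error_ns:.2f} ns per day")  # prints "8.64 ns per day"
```

Under that assumed offset, a clock would drift by less than ten nanoseconds a day, which gives a sense of why atomic references suit applications that need nanosecond-level agreement.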

The NTC programme has launched three innovation nodes to focus on the application of accurate and precise timing for new technologies and products for sectors including transport, telecommunications, fintech and quantum.

The nodes represent a partnership between NPL and academia, namely the University of Strathclyde, the University of Surrey and Cranfield University.

Within the next 10 years, we can expect the latest atomic clock technology to underpin a whole new generation of positioning, navigation and timing technologies. Organisations and industries that rely on accurate timings will need to adopt these. Time, the invisible utility that underpins our digital infrastructure, will have moved into a new era.

The NTC programme

By Caroline Hayes

The National Timing Centre (NTC) programme is the UK’s first nationally distributed time infrastructure that provides secure, reliable, resilient and highly accurate time and frequency data.

The NTC is led by the National Physical Laboratory and has introduced three innovation nodes in partnership with the University of Strathclyde, the University of Surrey, and Cranfield University to accelerate new technologies.

The innovation node at the University of Strathclyde was launched in March 2024.

All three sites at the Universities of Strathclyde, Surrey and Cranfield hosted 26 feasibility and demonstrator projects, funded by Innovate UK, to enable the development of new products and services.

One of these projects was to establish the evidence base for redistributing positioning, navigation and timing data to air- and ground-based autonomous systems in a smart city and to demonstrate the technologies required for safe, reliable and secure autonomous transport.

Another was to develop products and services using a resilient timing reference to accurately measure electricity grid performance.

Electronics Weekly
